An auditor opens your export file, finds a closing balance of €47,500, and pulls up the source PDF. Page 3, bottom-right corner: €47,000. Different number. "Where does the difference come from? Who changed it?"

If your extraction system can't answer that question in under a minute, you have a problem. Not a "we should probably document this better" problem. A compliance problem. The kind where someone asks to see the paper trail and you realize there isn't one.

Manual processes have this figured out. Sticky notes, initials, strikethroughs, dated signatures in the margin. When Maria in accounting corrects a figure, she leaves evidence. When your AI extraction pipeline corrects a figure, it just... overwrites.

The data is correct. The trust is missing.

The compliance reality

Regulated industries don't just need correct numbers. They need provenance. Not "we're pretty sure this is right" but "here's the exact pixel on page 83, here's who validated it, here's the timestamp."

Financial services, insurance, accounting firms—they all live in audit territory. The question isn't just "what's the value?" It's "how did we arrive at this value?" And that second question has to survive a skeptical examiner who assumes you made a mistake until proven otherwise.

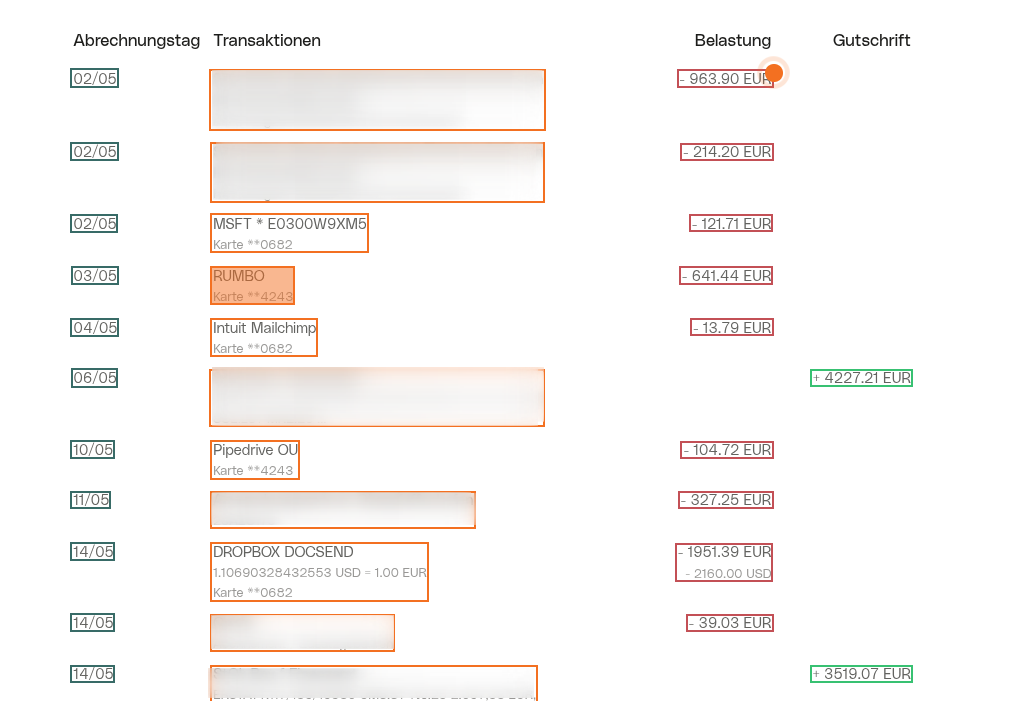

The irony is that automation was supposed to reduce risk. Fewer human touches, fewer transcription errors, more consistency. All true. But automation also created a new category of invisible changes. The OCR engine quietly interprets a smudged "7" as a "1." The normalization layer flips a negative balance to positive because that's how the bank renders debits. The extraction model picks one of two possible totals because the page had duplicate headers.

Each of these is a decision. Each of these changes the output. And unless you're tracking them, your audit trail has holes you can't see.
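To make "tracking them" concrete, here is a minimal sketch of a pipeline stage that records its own decision instead of silently rewriting a value. It assumes debits arrive as positive numbers in a dedicated debit column. `Mutation`, `normalize_debit` and the `"normalizer"` actor name are hypothetical illustrations, not Holofin's implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from decimal import Decimal


@dataclass(frozen=True)
class Mutation:
    field: str
    actor: str       # a human user ID, or a pipeline stage such as "normalizer"
    reason: str
    before: Decimal
    after: Decimal
    at: datetime


def normalize_debit(field: str, raw: Decimal, is_debit_column: bool,
                    log: list[Mutation]) -> Decimal:
    """Return a signed amount; log a mutation whenever the sign is changed."""
    if is_debit_column and raw > 0:
        signed = -raw
        # The machine's decision is recorded exactly like a human edit would be.
        log.append(Mutation(field, "normalizer", "debit rendered as positive",
                            raw, signed, datetime.now(timezone.utc)))
        return signed
    return raw  # untouched values produce no mutation
```

The point of the design is symmetry: an automated change and a human change land in the same log, so neither can hide from an auditor.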

What mutations actually look like

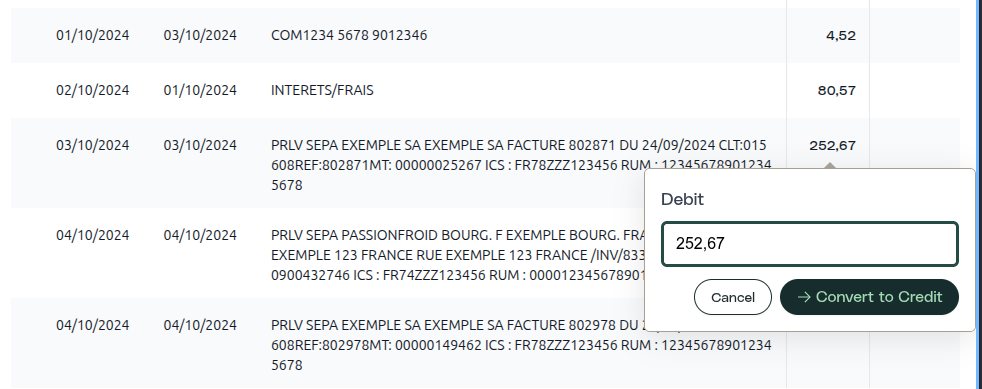

Before we look at mutations themselves, it's worth asking: how do humans end up editing extracted data in the first place?

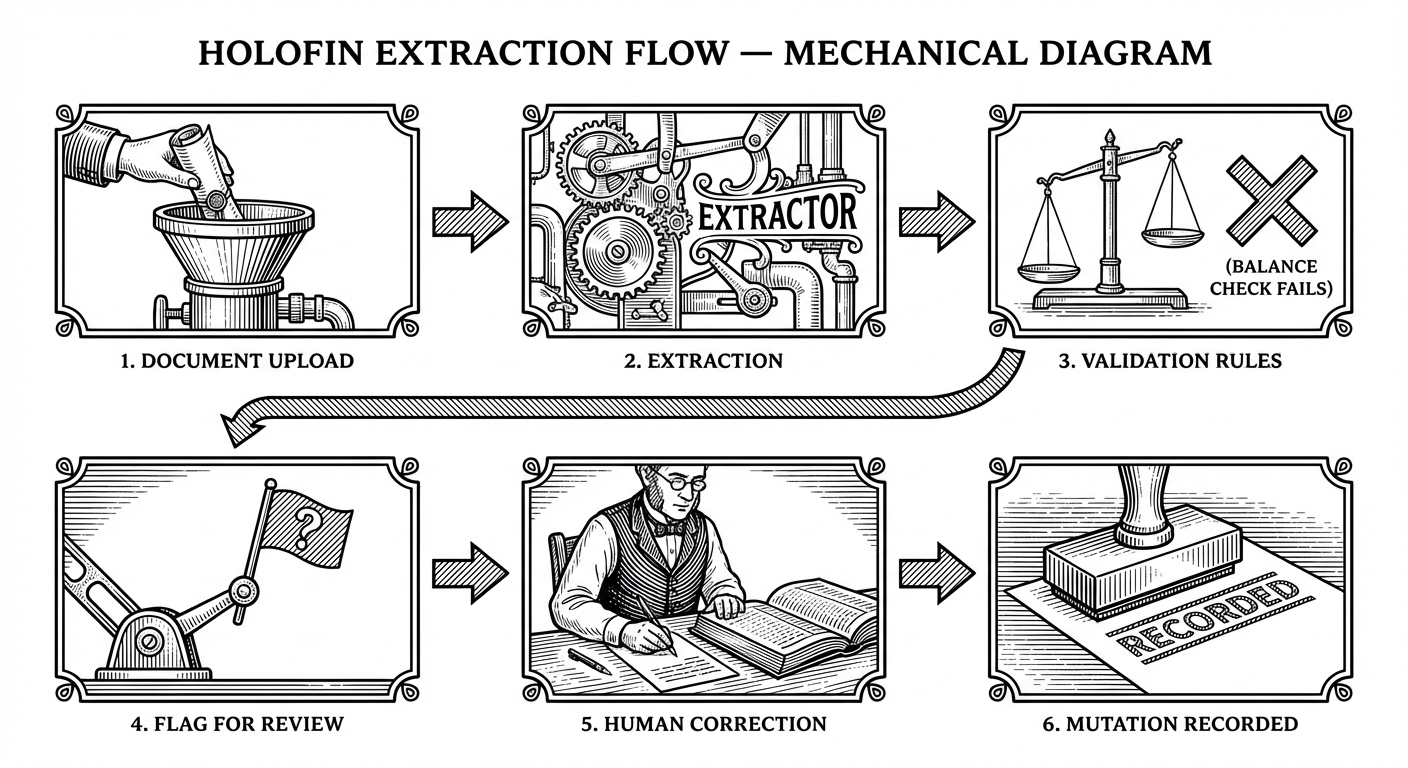

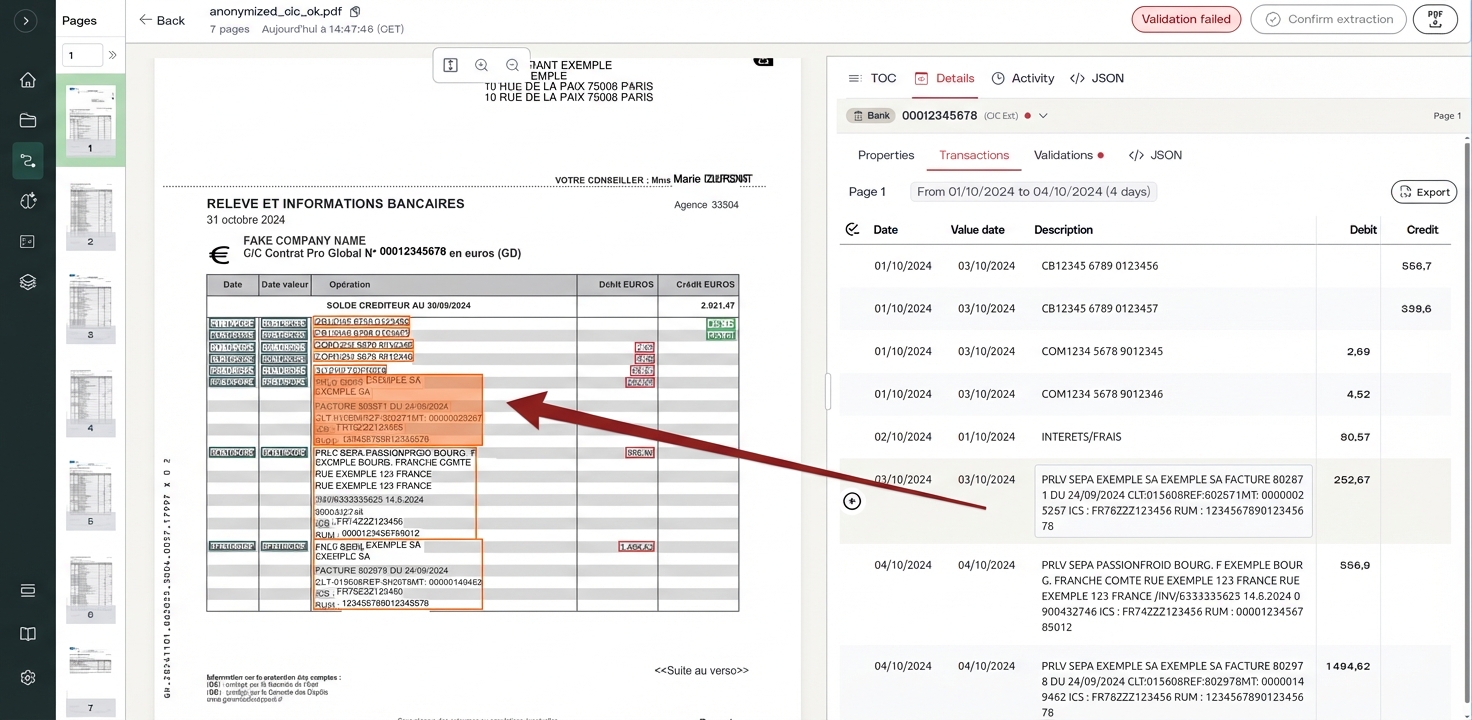

In Holofin, it starts with validation. After extraction, business rules run automatically against the data. For bank statements, that means balance equations: does the starting balance plus credits minus debits equal the closing balance? If the numbers don't reconcile within €0.02, the system flags it before anyone exports anything.
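The balance equation is simple enough to write down directly. This is a minimal sketch, not Holofin's rule engine: it assumes debits are listed as positive amounts to subtract and treats a gap of exactly €0.02 as still reconciling. `balance_gap` and `reconciles` are hypothetical names.

```python
from decimal import Decimal

TOLERANCE = Decimal("0.02")  # the €0.02 threshold described above


def balance_gap(opening: Decimal, credits: list[Decimal],
                debits: list[Decimal], closing: Decimal) -> Decimal:
    """Expected closing balance minus the extracted closing balance."""
    expected = opening + sum(credits, Decimal("0")) - sum(debits, Decimal("0"))
    return expected - closing


def reconciles(opening: Decimal, credits: list[Decimal], debits: list[Decimal],
               closing: Decimal, tolerance: Decimal = TOLERANCE) -> bool:
    """True if the statement balances within the tolerance (boundary inclusive)."""
    return abs(balance_gap(opening, credits, debits, closing)) <= tolerance
```

Using `Decimal` rather than `float` keeps cent-level amounts exact, so a €0.02 threshold means exactly €0.02. With an opening balance of 1,000.00, one credit of 1,238.45, one debit of 500.00 and a closing balance of 1,738.45, the statement reconciles; if the credit is misread as 1,236.45, the gap is -2.00 and the document is flagged.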

That flag is what pulls a human in. They open the document side by side with the source PDF and find the problem: a missed transaction, a misread digit, a duplicate that inflated the total. Without validation, the error travels silently into downstream systems. With it, the review is targeted—you're not asking someone to re-check 200 transactions, you're pointing them to "page 12, the credits don't add up, here's where to look."

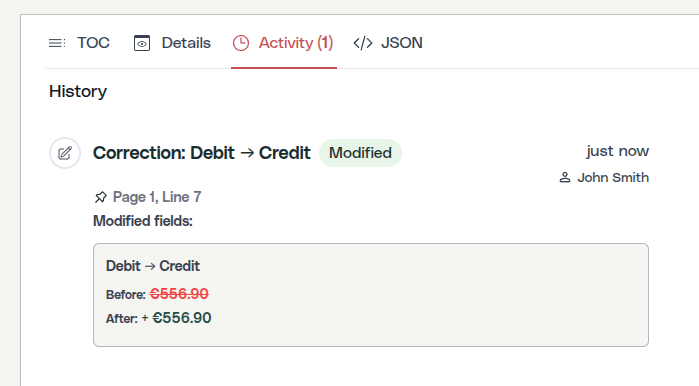

The human makes a correction. That correction is a mutation. And every mutation gets recorded with full attribution—because this is the audit trail:

A user adds a missing transaction.

OCR missed a faint line item—maybe the printer was running low on toner, maybe the scan was at a bad angle. The user sees the gap in the validation summary, opens the source PDF, finds the line, and adds the transaction manually. What gets recorded?

Who added it, when, what values they entered, and—critically—which page and coordinates the transaction comes from. The user is asserting "this data exists in the source document at this location." That assertion needs to be auditable.
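One way to capture that assertion is a record that cannot be created without a source location. This is a minimal sketch assuming normalized page coordinates (0 to 1, top-left origin). `SourceLocation`, `AddTransaction` and `add_missing_transaction` are hypothetical, not Holofin's schema.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from decimal import Decimal


@dataclass(frozen=True)
class SourceLocation:
    page: int
    box: tuple[float, float, float, float]  # normalized left, top, right, bottom


@dataclass(frozen=True)
class AddTransaction:
    user: str
    at: datetime
    date: str
    description: str
    amount: Decimal
    source: SourceLocation  # the user's claim of where this line appears


def add_missing_transaction(user: str, date: str, description: str, amount: str,
                            page: int, box: tuple[float, float, float, float]) -> AddTransaction:
    """Build an auditable 'transaction added' record; reject an empty source box."""
    left, top, right, bottom = box
    if not (0 <= left < right <= 1 and 0 <= top < bottom <= 1):
        raise ValueError("source box must be a non-empty region on the page")
    return AddTransaction(user, datetime.now(timezone.utc), date, description,
                          Decimal(amount), SourceLocation(page, box))
```

Making the location mandatory turns "I saw it somewhere" into a claim an auditor can check.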

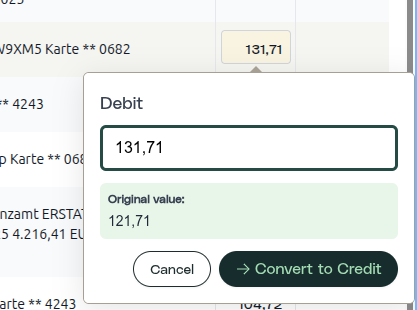

A user corrects an OCR error.

€1,238.45 was extracted as €1,236.45. On a bad scan, the OCR read an 8 as a 6. The validation flagged a balance mismatch of €2. The user opens the source PDF, spots the smudged digit, fixes it. What gets recorded?

Original value. New value. User. Timestamp. And the bounding box of the source text, so an auditor can visually verify the correction against the original document.
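The essential property is that a correction produces a new value and a record, never a silent overwrite. This is a minimal sketch under that assumption; `Field`, `Correction` and `correct` are hypothetical names, and the fields mirror the list above.

```python
from dataclasses import dataclass, replace
from datetime import datetime, timezone
from decimal import Decimal


@dataclass(frozen=True)
class Field:
    name: str
    value: Decimal
    page: int
    box: tuple[float, float, float, float]  # normalized left, top, right, bottom


@dataclass(frozen=True)
class Correction:
    field: str
    before: Decimal
    after: Decimal
    user: str
    at: datetime
    page: int
    box: tuple[float, float, float, float]


def correct(field: Field, new_value: Decimal, user: str,
            history: list[Correction]) -> Field:
    """Return a corrected copy of the field and append a record of the change."""
    if new_value == field.value:
        return field  # nothing changed, so nothing is recorded
    history.append(Correction(field.name, field.value, new_value, user,
                              datetime.now(timezone.utc), field.page, field.box))
    return replace(field, value=new_value)  # the original object stays intact
```

Because `Field` is frozen, the pre-correction value still exists, and the correction carries the same bounding box as the value it replaced.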

Every number has an address

Most extraction systems give you a value. A good extraction system gives you a value and its exact location on the source document.

In Holofin, every extracted field carries a bounding box—a set of coordinates that mark the precise rectangle on the page where the data was read. Not "page 3," but "page 3, 72% from the left edge, 45% down, this exact cluster of pixels."
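Storing coordinates as fractions of the page, as the percentages above suggest, makes a box independent of rendering resolution. This is a minimal sketch assuming a top-left origin; `BoundingBox` and `to_pixels` are hypothetical, not Holofin's format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    page: int
    left: float    # fraction of page width from the left edge
    top: float     # fraction of page height from the top edge
    width: float   # fraction of page width
    height: float  # fraction of page height

    def to_pixels(self, page_width_px: int, page_height_px: int) -> tuple[int, int, int, int]:
        """Pixel rectangle (x0, y0, x1, y1) for highlighting on a rendered page."""
        x0 = round(self.left * page_width_px)
        y0 = round(self.top * page_height_px)
        x1 = round((self.left + self.width) * page_width_px)
        y1 = round((self.top + self.height) * page_height_px)
        return x0, y0, x1, y1
```

A box at 72% from the left and 45% down maps to (720, 630) on a 1000×1400 px render and to proportionally larger pixels on a high-DPI render, so the highlight lands on the same text either way.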

This isn't a nice-to-have diagnostic detail. It's the foundation of the entire audit trail.

When an auditor questions a number, you don't just say "it came from the PDF." You show them. The source document opens with the relevant area highlighted. The extracted value sits next to the original. The auditor can see with their own eyes that the system read the right thing, or understand exactly why a human corrected it.

This spatial link also catches a category of silent failures that text-only extraction misses entirely. Values mapped to the wrong column because the table had no gridlines. A total pulled from a subtotal row because the layout shifted mid-page. A header that spans two columns, causing every value below it to shift one cell to the right. Without coordinates, these errors produce plausible-looking output that passes every text-based check. With coordinates, you can verify that the number labeled "closing balance" actually came from the closing balance position on the page.
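A coordinate check can be as simple as asking whether a value sits on the same text row as its label and to the right of it. This is a deliberately simple heuristic sketch, not Holofin's method: it assumes left-to-right, single-line labels in normalized coordinates, and `Box`, `vertical_overlap` and `value_sits_beside_label` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    left: float
    top: float
    right: float
    bottom: float


def vertical_overlap(a: Box, b: Box) -> float:
    """Fraction of the shorter box's height that the two boxes share."""
    shared = min(a.bottom, b.bottom) - max(a.top, b.top)
    shorter = min(a.bottom - a.top, b.bottom - b.top)
    return max(0.0, shared) / shorter if shorter > 0 else 0.0


def value_sits_beside_label(label: Box, value: Box, min_overlap: float = 0.5) -> bool:
    """True if the value shares a text row with its label and lies to its right."""
    return vertical_overlap(label, value) >= min_overlap and value.left >= label.right
```

A closing balance pulled from a subtotal row a few lines higher fails this check even though its text looks perfectly plausible.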

The bounding box turns "trust me" into "see for yourself."

This spatial link persists through every change. Corrections, deletions, restorations—each mutation keeps its source coordinates. Nothing is destroyed. Everything traces back to the pixels it came from.
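"Nothing is destroyed" suggests an append-only log from which the current state is replayed. This is a minimal sketch under that assumption, and it assumes a well-formed log (every field is extracted before it is corrected or deleted). `Event` and `current_state` are hypothetical names.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class Event:
    kind: str                    # "extract", "correct", "delete" or "restore"
    field: str
    user: str
    value: Optional[Decimal] = None
    page: Optional[int] = None
    box: Optional[tuple] = None  # normalized (left, top, right, bottom)


def current_state(log: list[Event]) -> dict:
    """Replay the append-only log; a deletion hides a field but never erases it."""
    state: dict = {}
    for e in log:
        if e.kind == "extract":
            state[e.field] = {"value": e.value, "page": e.page,
                              "box": e.box, "deleted": False}
        elif e.kind == "correct":
            state[e.field]["value"] = e.value  # source page and box stay untouched
        elif e.kind == "delete":
            state[e.field]["deleted"] = True
        elif e.kind == "restore":
            state[e.field]["deleted"] = False
        else:
            raise ValueError(f"unknown mutation kind: {e.kind}")
    return state
```

Since events are only ever appended, replaying any prefix of the log reconstructs the document as it looked at that point in time.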

When the auditor calls

Let's return to that auditor with the mismatched balance.

With a proper mutation trail, the answer is immediate:

"The AI extracted €47,000 from page 3. On January 15th at 14:32, Marie Dubois corrected it to €47,500—page 47 contains a manual adjustment entry that the initial extraction missed due to non-standard formatting. Here's the source document with both locations highlighted, and the timestamped correction record."

Compare that to: "Let me check with the team and get back to you."

The first response builds trust. The second triggers a deeper investigation.

Two views of the same truth

This entire trail surfaces in two places: the UI and the API.

In the Holofin UI, every extraction has an activity log. Users see each correction as it happened—who changed what, when, and from which value to which. It's the document's story, told chronologically. When a team member opens an extraction someone else worked on, they can reconstruct every decision without asking a single question.
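Rendering that story is mostly a matter of sorting and formatting. This is a minimal sketch with hypothetical `Change` and `activity_log` names; the line format is illustrative, not the Holofin UI's.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Change:
    at: datetime
    user: str
    field: str
    before: str
    after: str


def activity_log(changes: list[Change]) -> list[str]:
    """Chronological lines: who changed what, when, and from which value to which."""
    return [
        f"{c.at:%Y-%m-%d %H:%M} {c.user} changed {c.field}: {c.before} -> {c.after}"
        for c in sorted(changes, key=lambda c: c.at)
    ]
```

Sorting at render time means changes can be stored in whatever order they arrive and still read as a single timeline.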

In the API export, the same data comes structured. Every document export includes its mutation history alongside the extracted data: mutation type, user, timestamp, before/after values, and source coordinates. Your downstream systems don't just receive numbers; they receive numbers with provenance. An accounting platform consuming the API can show its own auditors exactly where each figure originated and who validated it.
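As an illustration only, an export that bundles values with their history could be serialized like this. The key names (`fields`, `mutations`, `type`, `before`, `after` and so on) are hypothetical and do not describe the actual Holofin API schema; `export_document` is likewise an assumed name.

```python
import json
from datetime import datetime, timezone
from decimal import Decimal


def export_document(fields: dict, mutations: list[dict]) -> str:
    """Serialize extracted values together with their mutation history as JSON."""
    def encode(obj):
        if isinstance(obj, Decimal):
            return str(obj)       # keep amounts exact; floats could round them
        if isinstance(obj, datetime):
            return obj.isoformat()  # timezone-aware timestamps keep their offset
        raise TypeError(f"not serializable: {type(obj).__name__}")

    return json.dumps({"fields": fields, "mutations": mutations},
                      default=encode, indent=2, sort_keys=True)
```

Emitting amounts as strings is a deliberate choice: a consuming system can parse them into its own decimal type without inheriting binary floating-point rounding.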

This matters because auditors don't all work in the same tool. Some will check the trail in Holofin directly. Others will want it in their own system. The data needs to be portable.

We've been in enough audit conversations to know: the question is never "is the data correct?" The question is always "can you prove it?"

The cost of not having this

Building audit trails takes engineering effort. Every mutation type needs a schema. Every state change needs a record. Every record needs to be queryable, exportable, and retained for the legally required period.

The alternative is worse.

One audit finding that you can't explain creates more work than a year of mutation tracking. One client who loses confidence in your data provenance creates more damage than the engineering cost of getting it right.

The best extraction system is the one that can explain itself.

Data is the output. The audit trail is the trust.
